세찬하늘

[Paper] Attention Is All You Need_Understanding the Transformer (Overview + Architecture Summary)_Part 1

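🧠 Transformer Overview

The Transformer was first introduced in 2017 by Vaswani et al. in the paper *Attention Is All You Need*. It emerged to overcome the limitations of the RNN- and CNN-based Sequence-to-Sequence models that were then the mainstream in natural language processing (NLP).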

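Earlier RNN-based models (e.g., LSTM, GRU) must process the words of a sentence one at a time, in order. This makes parallel processing difficult, and as sentences get longer, information from early words fades toward the end: the long-range dependency problem.

For example, in a long sentence that begins "나는 어릴 적 꿈이 뭐였냐면..." ("As for what my childhood dream was, I..."), the opening "나는" ("I") is poorly remembered by the time the last word is generated.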

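CNN-based models, on the other hand, can be parallelized, but a fixed kernel size limits how many words they can see at once (the receptive field). This makes it hard to learn relationships between words that are far apart.

For example, when "나는" ("I") and "갔다" ("went") are far apart, a CNN has to pass through several layers before it can relate the two words.

The Transformer was proposed to address these limitations.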

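The Transformer removes both recurrence and convolution and instead relies solely on the Self-Attention mechanism to compute the relationships among all words in a sentence at once.

As a result, computation can be parallelized, and a dependency between distant words is captured in a single attention step, which substantially improved both training speed and performance.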

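📦 Architecture Walkthrough (Example: English → Korean Translation)

The Transformer consists of two main parts, an Encoder and a Decoder.

- As a running example, take the input sentence "I am a student" and the output (target) "나는 학생이다".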

🔷 1. Input Embedding + Positional Encoding

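The input sentence first passes through an embedding that converts each word into a numeric vector, and Positional Encoding is then added so that word order is known.

This lets the model recognize that "I" is the first word and "student" is the fourth.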
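As a rough illustration of this step, the sketch below builds the sinusoidal positional encoding used in the original paper and adds it to toy embeddings. The random `embeddings` matrix, the toy size `d_model = 8`, and the function name are assumptions made for the example; a real model would use learned embeddings, and this version assumes an even `d_model`.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Return a (seq_len, d_model) matrix of sinusoidal position codes (d_model even)."""
    positions = np.arange(seq_len)[:, None]       # (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]      # even dimension indices 0, 2, 4, ...
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                  # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)                  # odd dimensions use cosine
    return pe

tokens = ["I", "am", "a", "student"]
d_model = 8                                       # toy size for the example

rng = np.random.default_rng(0)
embeddings = rng.normal(size=(len(tokens), d_model))   # stand-in for learned embeddings

pe = sinusoidal_positional_encoding(len(tokens), d_model)
encoder_input = embeddings + pe                   # position information is simply added
print(encoder_input.shape)
```

Each row of `pe` is different, so two identical words at different positions end up with different input vectors.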

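🔶 2. Encoder: Capturing the Context of the Whole Input

The Encoder is a stack of identical blocks, and each block contains the following two components:

- Multi-Head Self-Attention: the words in "I am a student" look at (attend to) one another to identify important relationships. For example, the model learns that "student" can be linked to "I".

- Feed Forward Network: each word's representation is then transformed further (see step 4 below).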

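🧠 Setup: a sentence and its word embeddings

Sentence: "The cat sat on the mat", with each word embedded as a vector (e.g., d_model = 512).

✅ Summary

| Step | What it does |
| --- | --- |
| Create Q, K, V | Transform each word from three different perspectives |
| Dot product (Q·K) | Compute the relatedness (similarity) between words |
| Softmax | Convert the scores into importance weights |
| Weighted sum | Gather information from the important words to form the word's own representation |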
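The four steps in the summary can be sketched in a few lines of NumPy. This is a minimal single-head version under stated assumptions: the weight matrices are random stand-ins for learned parameters, the sizes are toy values, and the scores are divided by the square root of the key dimension as in the original paper (a detail the summary above leaves out).

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sentence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v          # 1) create Q, K, V
    scores = q @ k.T / np.sqrt(k.shape[-1])      # 2) dot product Q·K (scaled)
    weights = softmax(scores, axis=-1)           # 3) softmax -> importance weights
    return weights @ v, weights                  # 4) weighted sum of the values

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 6, 16, 8                 # "The cat sat on the mat" has 6 words
x = rng.normal(size=(seq_len, d_model))          # stand-in for word embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape, weights.shape)
```

Row `i` of `weights` says how much word `i` draws from every word in the sentence, and each row sums to 1.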

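Composition of the encoder (per layer):

1. Input Embedding + Positional Encoding
→ converts words into vectors and adds position information (applied once, before the first layer)

2. Multi-Head Self-Attention
→ the words in the sentence look at one another to work out their relationships (as summarized above)

3. Add & LayerNorm
→ residual connection and layer normalization for stable training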

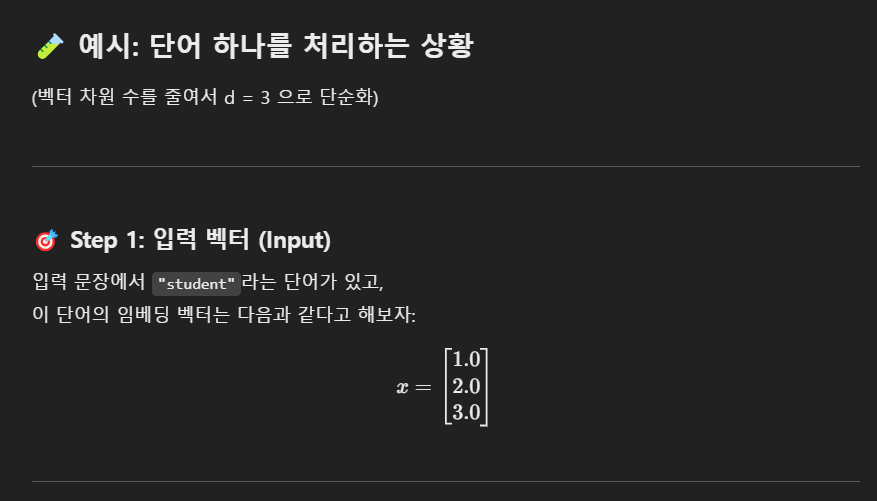

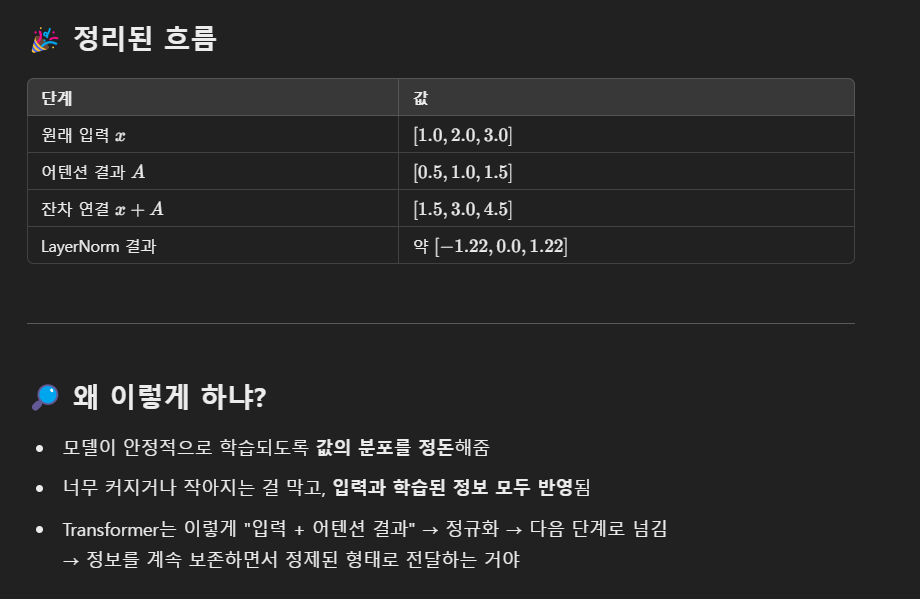

Example of residual connection and normalization

Summary: why residual connections and normalization are used

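In the Transformer, multi-head attention produces a new, context-aware representation, but in the process the structure or information of the original input vector risks being excessively distorted or lost.

To compensate, a Residual Connection adds the original input to the attention output, so the model retains both the contextual information and the information in the input itself.

The values of this sum can have an unstable distribution, so Layer Normalization is applied next, rescaling each word vector to mean 0 and variance 1 (before the learned scale and shift), which improves training stability and convergence speed.

Together, these two components are a key mechanism that helps the Transformer learn meaningful representations effectively, with little information loss, even in deep stacks.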
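The Add & LayerNorm step described above might look like the following sketch. The names `add_and_norm`, `gamma`, and `beta` are chosen for the example, and the "sublayer output" is random noise standing in for a real attention output.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each row (one word vector) to mean 0 and variance 1, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def add_and_norm(x, sublayer_out, gamma, beta):
    """Residual connection (x + sublayer output) followed by layer normalization."""
    return layer_norm(x + sublayer_out, gamma, beta)

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.normal(size=(seq_len, d_model))                   # input to the sublayer
attn_out = 5.0 * rng.normal(size=(seq_len, d_model))      # stand-in for an attention output
gamma, beta = np.ones(d_model), np.zeros(d_model)         # initial learned scale and shift

normed = add_and_norm(x, attn_out, gamma, beta)
print(normed.mean(axis=-1).round(6))   # per-word mean after normalization
print(normed.var(axis=-1).round(3))    # per-word variance after normalization
```

With `gamma = 1` and `beta = 0`, every word vector comes out with mean close to 0 and variance close to 1, whatever the scale of the sum was.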

4. Feed Forward Network (FFN)

→ non-linearly expands and transforms each word's representation (2-layer MLP)

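🔧 A Closer Look at the Feed Forward Network (FFN)

The Transformer's FFN is really just a very simple two-layer neural network. It is the MLP (Multi-Layer Perceptron) structure commonly used in deep learning, and the same FFN is applied to each word (vector) individually.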

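📌 Summary flow

Each word goes through this process on its own (expand the dimension, apply ReLU, project back to the original dimension), and the result is a representation that, with Self-Attention already applied, has been transformed one step further semantically.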

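※ The Transformer encoder is designed to repeat layers of identical structure. For this to work, each layer's input and output dimensions must be the same, so that a layer's output can be fed directly into the next layer.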
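A minimal sketch of the position-wise FFN follows, assuming toy sizes (`d_model = 8`, `d_ff = 32`) and random weights; the original paper uses 512 and 2048. It also checks the two properties mentioned above: the output has the same dimension as the input, and each word is processed independently.

```python
import numpy as np

def feed_forward(x, w1, b1, w2, b2):
    """Two-layer MLP applied to every row (word vector) independently."""
    hidden = np.maximum(0.0, x @ w1 + b1)   # expand to d_ff and apply ReLU
    return hidden @ w2 + b2                 # project back down to d_model

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 4, 8, 32           # toy sizes for the example
x = rng.normal(size=(seq_len, d_model))
w1, b1 = 0.1 * rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = 0.1 * rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

out = feed_forward(x, w1, b1, w2, b2)
single = feed_forward(x[2:3], w1, b1, w2, b2)   # run the third word on its own

print(out.shape == x.shape)                 # same shape in and out
print(np.allclose(out[2], single[0]))       # same result with or without the other words
```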

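5. Add & LayerNorm (once more)

→ residual connection and normalization again

This is repeated N times (typically 6 layers, as in the original paper) → the whole encoder is complete!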

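Recapping the encoder once more

🧠 Transformer Encoder Summary

The Transformer encoder converts an input sentence into rich, context-aware representation vectors, and it is built by stacking blocks (layers) of the same form N times.

The encoder operates in the following order: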

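① Input embedding + Positional Encoding

Each word of the input sentence is first embedded as a fixed-size vector. Because Self-Attention on its own has no notion of word order, Positional Encoding is applied to add position information to each word.

The resulting input vectors are fed into the first encoder block.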

② Multi-Head Self-Attention

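The incoming word vectors "look at" one another and exchange contextual information.

Each word is transformed into Query, Key, and Value vectors, its similarity (importance) to every word is computed, and the information is combined as a weighted sum accordingly.

Running this process in parallel across several heads lets the model learn relationships between words from different perspectives, and the results are concatenated to produce a context-aware representation.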
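The multi-head step can be sketched as follows. This is an illustrative version with toy sizes (2 heads, `d_model = 8`; the original paper uses 8 heads with `d_model = 512`) and random weights. The final projection `w_o` applied after concatenation follows the original paper and is not spelled out in the text above.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    seq_len, d_model = x.shape
    d_head = d_model // num_heads               # each head works in a smaller subspace

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq, seq)
    weights = softmax(scores, axis=-1)                    # one attention map per head
    heads = weights @ v                                   # (heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)  # concatenate the heads
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 8, 2           # toy sizes for "I am a student"
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, weights.shape)
```

Each head gets its own attention map (`weights[h]`), which is what allows different heads to focus on different kinds of relationships.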

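③ Residual Connection + Layer Normalization

The output of Self-Attention is added to the input vector (residual connection), which preserves the original information so that it is not lost.

Normalization then stabilizes the distribution of values, so training proceeds faster and more stably.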

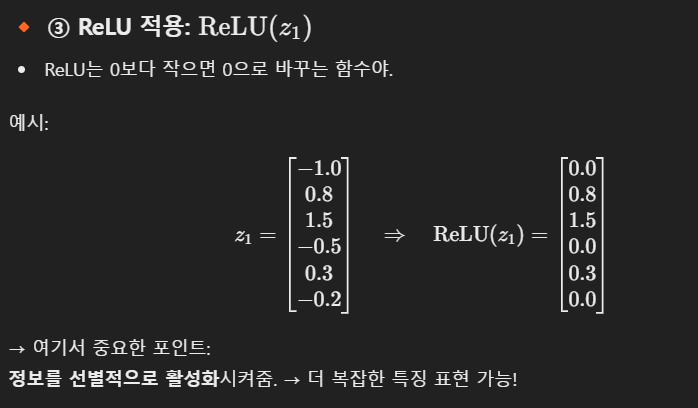

④ Feed Forward Network (FFN)

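Each context-aware word vector passes individually through a two-layer fully connected network.

The dimension is first expanded and a non-linear activation (ReLU) is applied, and the vector is then projected back down to the original dimension, yielding a more abstract representation.

This process is applied to each word independently.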

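⑤ Residual connection + Layer Normalization again

The input is also added to the FFN output, and layer normalization is applied to stabilize the values.

Through this process, each word vector is passed to the next block carrying both contextual information and its own original information.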

✅ Encoder structure at a glance (one layer):

Input → [Multi-Head Self-Attention]

→ Add & LayerNorm

→ Feed Forward Network

→ Add & LayerNorm

→ Output → to the next layer

Repeating this structure N times completes the encoder, and the final output is a context-aware vector representation for each word.
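Putting the pieces together, the layer structure above can be sketched as a short loop. To keep it compact, this version makes several simplifying assumptions: single-head attention, no biases, no learned LayerNorm scale and shift, and random weights. `init_layer` and the parameter names are hypothetical helpers for the example.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-5):
    # learned scale and shift omitted for brevity
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def encoder_layer(x, p):
    """Input -> Self-Attention -> Add & LayerNorm -> FFN -> Add & LayerNorm -> Output."""
    q, k, v = x @ p["w_q"], x @ p["w_k"], x @ p["w_v"]
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1])) @ v    # single head for brevity
    x = layer_norm(x + attn)                              # Add & LayerNorm
    ffn = np.maximum(0.0, x @ p["w1"]) @ p["w2"]          # Feed Forward Network
    return layer_norm(x + ffn)                            # Add & LayerNorm

def init_layer(rng, d_model, d_ff):
    shapes = {"w_q": (d_model, d_model), "w_k": (d_model, d_model),
              "w_v": (d_model, d_model), "w1": (d_model, d_ff), "w2": (d_ff, d_model)}
    return {name: 0.1 * rng.normal(size=shape) for name, shape in shapes.items()}

rng = np.random.default_rng(0)
seq_len, d_model, d_ff, num_layers = 4, 8, 32, 6          # N = 6 as in the original paper
layers = [init_layer(rng, d_model, d_ff) for _ in range(num_layers)]

x = rng.normal(size=(seq_len, d_model))                   # embeddings + positional encoding
h = x
for params in layers:                                     # each layer has its own weights
    h = encoder_layer(h, params)

print(h.shape)   # same shape as the input, one context-aware vector per word
```

Because every layer maps `(seq_len, d_model)` to `(seq_len, d_model)`, the output of one layer can be fed straight into the next.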

🔸 3. Decoder: Generating the Translation (covered in detail in the next part)

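The Decoder has a structure similar to the Encoder, but is built from the following components:

- Masked Multi-Head Attention: the decoder generates the output sentence one word at a time. During training the whole sentence "나는 학생이다" is used, but a mask ensures that each position sees only the words up to that point when predicting the next one. That is, the correct answer "학생" is hidden, and the model must predict "학생" from "나는" alone.

- Encoder-Decoder Attention: draws on the information from the input sentence when generating output words. When generating the word "나는", it also takes into account the meaning of "I am a student" as analyzed by the Encoder.

- Feed-Forward Network: applies a final transformation to the resulting information.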

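🎯 4. Linear + Softmax → Probability Prediction

The Decoder's output is passed through a Linear Layer that maps it to a vector the size of the vocabulary, and Softmax then computes how likely each word is to come next.

For example, it produces a probability distribution such as 0.82 for "학생" ("student"), 0.05 for "선생" ("teacher"), and 0.01 for "의사" ("doctor"), and then outputs the word with the highest probability.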
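As a small illustration of this last step, the sketch below maps one decoder output vector to a distribution over a toy vocabulary and picks the most likely word. The five-word `vocab`, the random weights, and the variable names are all assumptions for the example, so the probabilities it prints will not match the 0.82 / 0.05 / 0.01 figures above.

```python
import numpy as np

def softmax(logits):
    e = np.exp(logits - logits.max())
    return e / e.sum()

vocab = ["나는", "학생", "선생", "의사", "이다"]       # hypothetical toy vocabulary
d_model = 8

rng = np.random.default_rng(0)
decoder_output = rng.normal(size=d_model)            # stand-in for the decoder's output vector
w_out = rng.normal(size=(d_model, len(vocab)))       # Linear layer: d_model -> vocabulary size
b_out = np.zeros(len(vocab))

logits = decoder_output @ w_out + b_out              # one score per vocabulary word
probs = softmax(logits)                              # scores -> probability distribution
next_word = vocab[int(np.argmax(probs))]             # pick the highest-probability word

for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
print("predicted:", next_word)
```

Always taking the arg max like this is usually called greedy decoding.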

📚 Training

Because the Transformer described here is trained with Supervised Learning, it requires a dataset of matched pairs of inputs and correct outputs.

Example:

| Input | Output (Target) |
| --- | --- |
| "I am a student" | "나는 학생이다" |
| 🖼️ Image (photo of a dog) | "강아지가 풀밭에서 뛴다" ("A dog runs across the grass") |

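During training, the target sentence is shifted right by one position and fed to the decoder as input (teacher forcing), and at each position the model is trained to predict the next word.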
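The shift-by-one setup, together with the decoder's mask, can be sketched as below. The `<sos>` and `<eos>` marker tokens, the word-level split of the target sentence, and the helper names are assumptions for the example; the random `scores` stand in for the decoder's real Q·K scores.

```python
import numpy as np

def shift_right(target_tokens, sos="<sos>", eos="<eos>"):
    """Build decoder inputs and labels for teacher forcing."""
    decoder_input = [sos] + target_tokens     # what the decoder is given
    labels = target_tokens + [eos]            # what it must predict at each position
    return decoder_input, labels

def causal_mask(seq_len):
    """True where position i may attend to position j (only j <= i)."""
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_softmax(scores, mask):
    scores = np.where(mask, scores, -np.inf)  # hidden positions get zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

target = ["나는", "학생이다"]
decoder_input, labels = shift_right(target)
mask = causal_mask(len(decoder_input))

rng = np.random.default_rng(0)
scores = rng.normal(size=mask.shape)          # stand-in for decoder attention scores
weights = masked_softmax(scores, mask)

for t, label in enumerate(labels):
    visible = [tok for tok, ok in zip(decoder_input, mask[t]) if ok]
    print(f"step {t}: sees {visible} -> predicts {label!r}")
```

The mask is what lets all positions be trained in one pass: every row only receives weight from earlier positions, so no position can peek at the word it is supposed to predict.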

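✅ Summary

- The Transformer is a fully attention-based model that overcomes the limitations of RNNs and CNNs.

- Because all positions in a sequence can be processed in parallel during training, training is fast, and the model remains effective on long sentences.

- The architecture is split into an Encoder and a Decoder, and each layer consists of Multi-Head Attention + Feed Forward + LayerNorm + Residual.

- Self-Attention captures relationships between words, and Multi-Head strengthens this by looking from several perspectives.

- Training requires input-output pairs, and the output is produced through Softmax as a probability distribution over the entire vocabulary.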